Multi-passage Preliminary Results

Multiple Passages Results

Last week we experimented with extending the BiDAF model to take in multiple passages, and have an attention mechanism across those passages. Unfortunately, we did not get the results we were hoping for. This week we went ahead and implemented an argmax approach.

In more detail, we sent each passage through the answer extractor individually, and grabbed the probability of the most likely start and end indexes. We then multiplied them together along with the tf-idf similarity between that passage and the query. After doing this for all the passages, we choose the answer that had the highest product of the terms just described.

One benefit of this approach over the larger models with attention over the passages is that we can train the answer extractor on it’s own, and then switch them in and out of the argmax framework for testing. In addition, it is a much simpler model and therefore is easier to train.

Trying the argmax approach with all of our previous models on the location dataset, we get the following results:

| Baseline | Attention | Coattention (Bug) | BiDAF | |

|---|---|---|---|---|

| Bleu-1 | 0.292 | 0.301 | 0.129 | 0.302 |

| Rouge-L | 0.286 | 0.297 | 0.228 | 0.291 |

Here are the results with the attention answer extractor on the different answer types:

| Description | Numeric | Entity | Location | Person | |

|---|---|---|---|---|---|

| Bleu-1 | 0.298 | 0.215 | 0.201 | 0.301 | 0.240 |

| Rouge-L | 0.298 | 0.330 | 0.205 | 0.297 | 0.287 |

Error Analysis

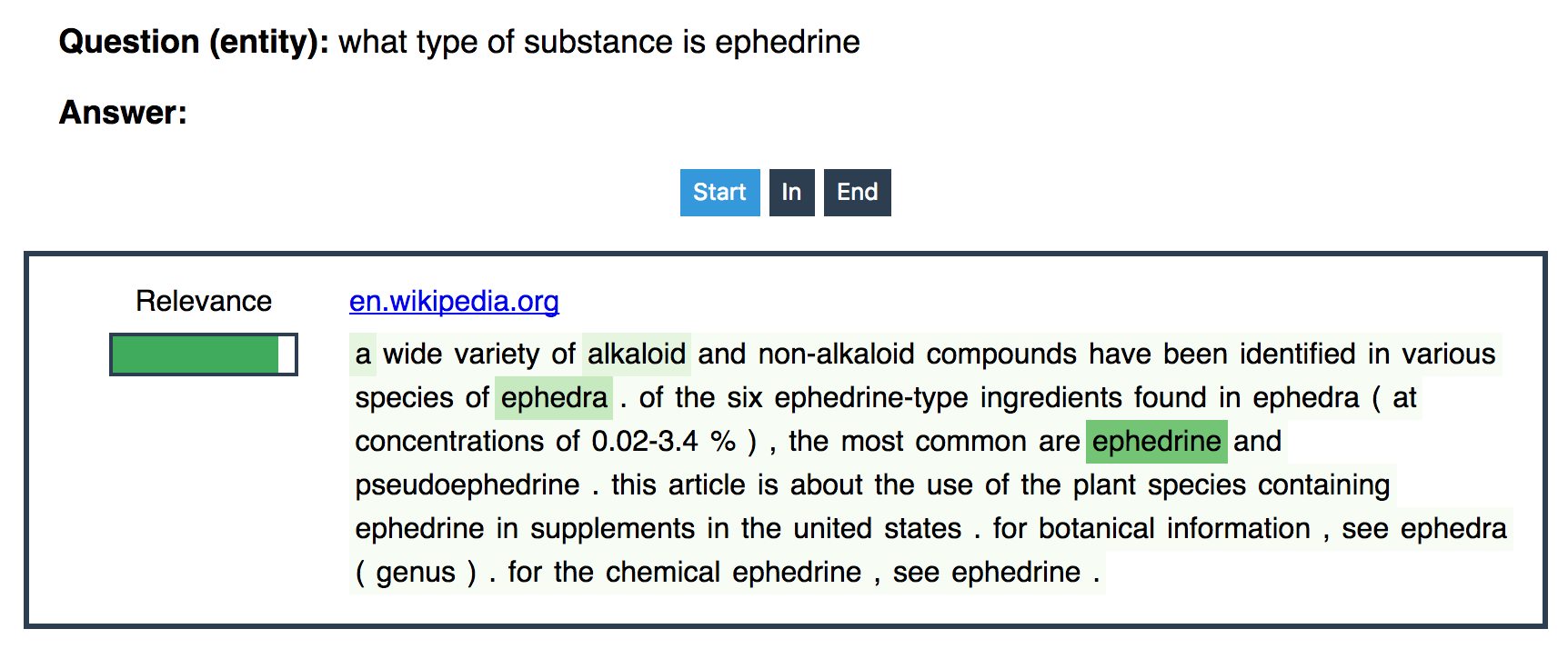

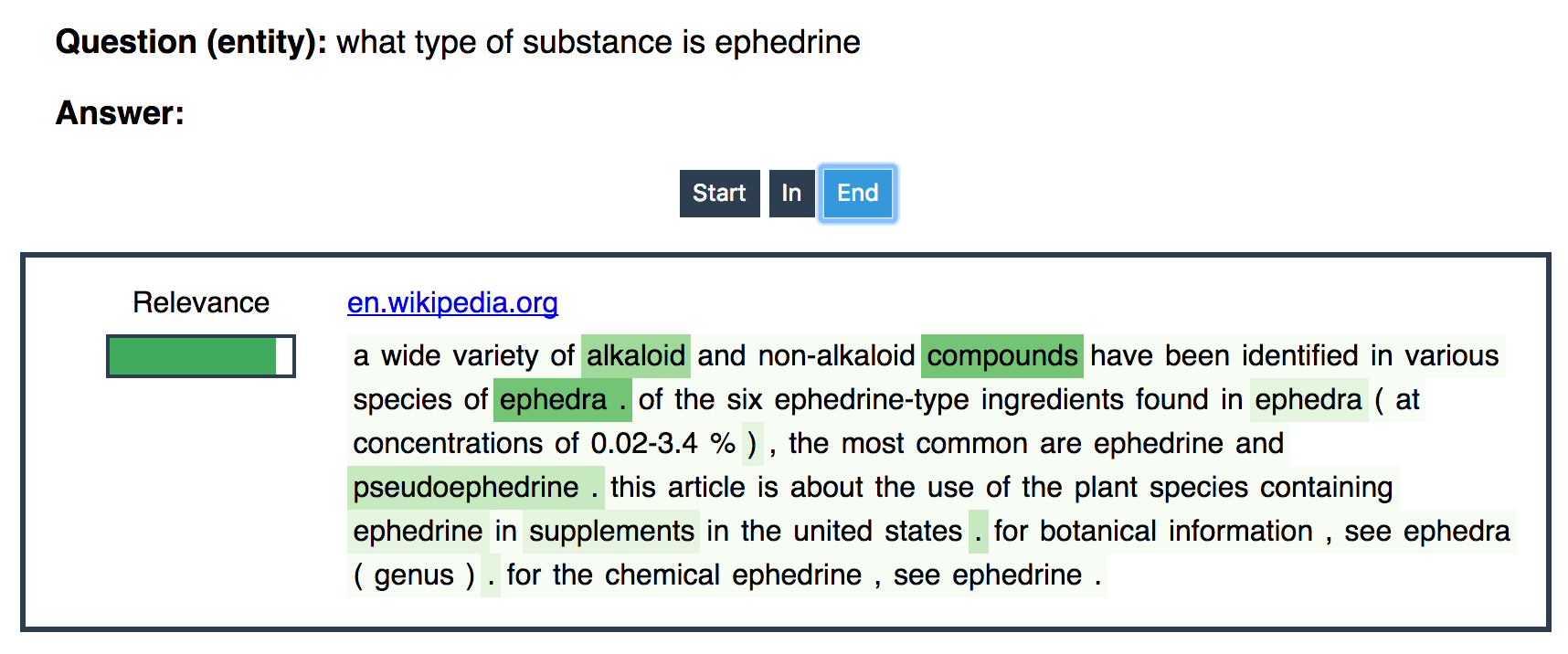

The demo has made error analysis much easier, since we can now easily see the relevance of passages as well as the start of answer and end of answer probabilities for each word.

No Answer

Our model has a problem with occasionally predicting zero for both the start and end indices, which yields no answer. This seems to happen with about 5% of questions, which could have a significant impact on our Bleu and Rouge scores. It seems that the model predicts no answers when the most likely end token appears before the most likely start token. We should be able to fix this without too much trouble.

Poor Numeric Answers

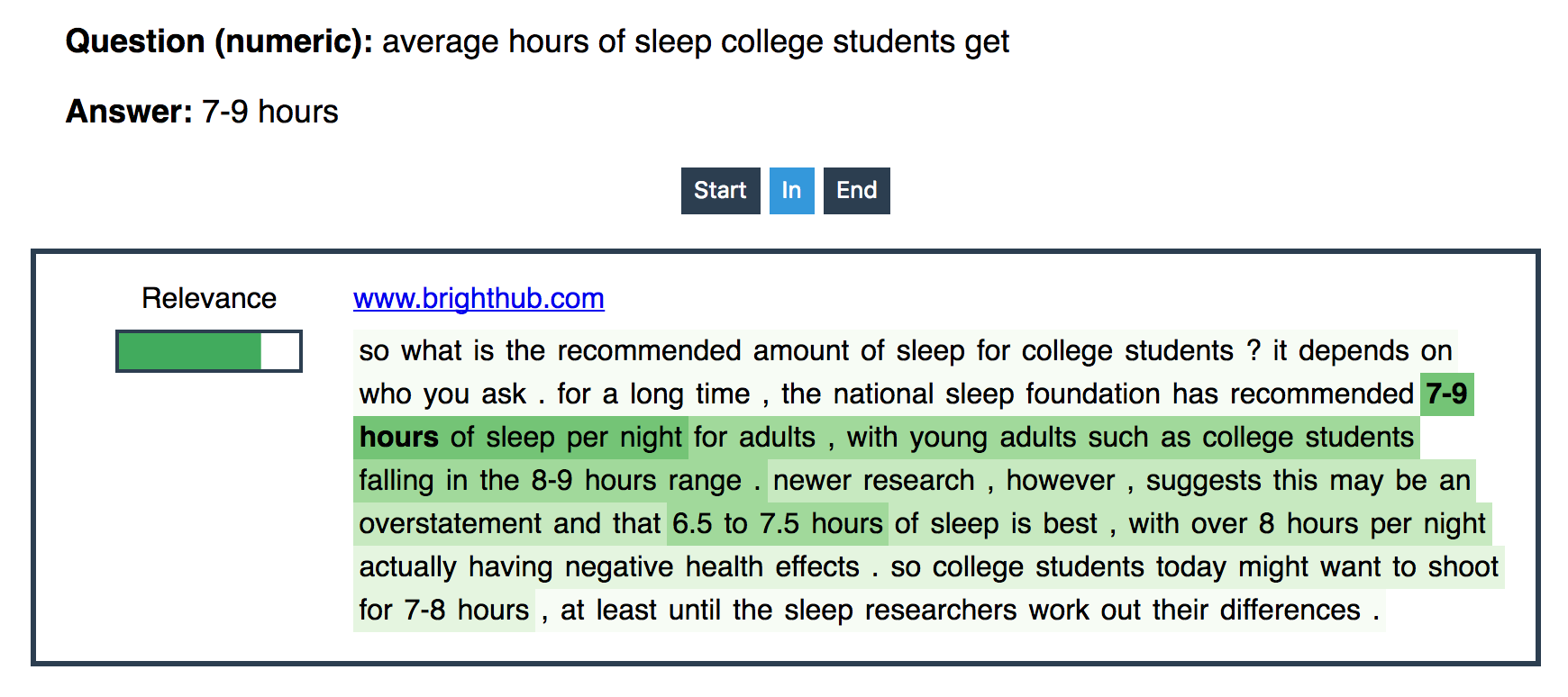

Numeric answers represent about a quarter of the dataset. Our answers for numeric questions aren’t quite as good as they are for other answer types. I think that this stems from the word embeddings that we are using. While some numbers are representing in the GloVe embeddings, most are just treated as unknowns. We could do something much smarter here. Simply using a special “number” symbol would probably work better than unknown. We could also have a separate symbol for each unit. This is low hanging fruit that could improve our numeric scores substantially.

Lack of Comprehension

For some questions, our model provides plausible but incorrect answers. For the question below, the true answer is “8-9 hours”, but our answer is “7-9 hours”. We predict a number at least, but some of the nuance is not understood. Right now we have only seen the demo on our attention model, but it would be interesting to see how other models do in situations like this.

Areas For Improvement

With the exception of the coattention model, which we believe we introduced a bug to at some point, the results are much better than last week’s. It was interesting that there was not much variation in results for the different answer extractors. This leads us to believe that we can benefit the most at this point by improving how we select the answers after they are fed through the answer extractor. This may mean weighting the passages differently, and accounting for the different lengths of passages. In addition to this, hyper-parameter tuning should be able to boost the results at least a bit as well.

Demo Work

A lot of our time in the past week had been devoted to our presentation and demo. The demo is available here. We ran our attention model on the MS MARCO testing dataset to produce answers for the demo. Unfortunately, we could not extract answers for description questions due to time constraints and bugs in our code, but we will have these for the final demo.

Right now our demo is limited to questions in the test dataset. Extending it to free-form input would be very interesting, but outside of the scope of this project, since it would require scraping Bing/Google and then extracting context passages from webpages.